Installation

git clone https://github.com/ElektorLabs/CaptureCount.git

cd CaptureCount/yolo

wget https://pjreddie.com/media/files/yolov3.weights

sudo apt install python3-opencv

sudo apt install python3-numpy python3-pandas

Code

Largely inspired by https://github.com/ElektorLabs/CaptureCount
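Before running the script, it can help to confirm that the three files it loads are in place. The following is a minimal sketch, assuming the script is launched from the directory that contains the yolo folder; the helper name missing_files is hypothetical and not part of the original project.

```python
import os

# Paths used by the script below; adjust them if your layout differs.
REQUIRED_FILES = [
    "./yolo/yolov3.weights",
    "./yolo/yolov3.cfg",
    "./yolo/coco.names",
]

def missing_files(paths):
    """Return the subset of paths that do not exist on disk."""
    return [p for p in paths if not os.path.isfile(p)]

if __name__ == "__main__":
    missing = missing_files(REQUIRED_FILES)
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All model files found.")
```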

# coding: utf-8
# Working Object Tracker V1.2, adapted from https://www.learnopencv.com/deep-learning-based-object-detection-using-yolov3-with-opencv-python-c/
# This script will detect objects in a picture

import cv2
import pandas as pd
import time
from datetime import datetime
import numpy as np

# Load pre-trained model and configuration
model = './yolo/yolov3.weights' # Change this to your model's path
config = './yolo/yolov3.cfg' # Change this to your config file's path
net = cv2.dnn.readNetFromDarknet(config, model)

# Load class names
classes = []
with open("./yolo/coco.names", "r") as f: # Change to the path of your coco.names file
    classes = [line.strip() for line in f.readlines()]

# Function to get output layers
def get_output_layers(net):
    layer_names = net.getLayerNames()
    return [layer_names[i - 1] for i in net.getUnconnectedOutLayers().flatten()]
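The `i - 1` in get_output_layers is there because getUnconnectedOutLayers() returns 1-based layer indices, and .flatten() copes with OpenCV versions that return either an N×1 array or a flat array. Here is a small sketch with made-up layer names (no model needed), using only NumPy; pick_output_layers is a hypothetical stand-in for the function above.

```python
import numpy as np

# Hypothetical layer names standing in for net.getLayerNames().
layer_names = ["conv_0", "yolo_1", "conv_2", "yolo_3"]

def pick_output_layers(names, out_indices):
    """Map 1-based layer indices to names, whatever the array shape."""
    return [names[i - 1] for i in np.asarray(out_indices).flatten()]

# Older OpenCV builds return a column vector, newer ones a flat array.
print(pick_output_layers(layer_names, np.array([[2], [4]])))  # ['yolo_1', 'yolo_3']
print(pick_output_layers(layer_names, np.array([2, 4])))      # ['yolo_1', 'yolo_3']
```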

# Function to draw bounding box

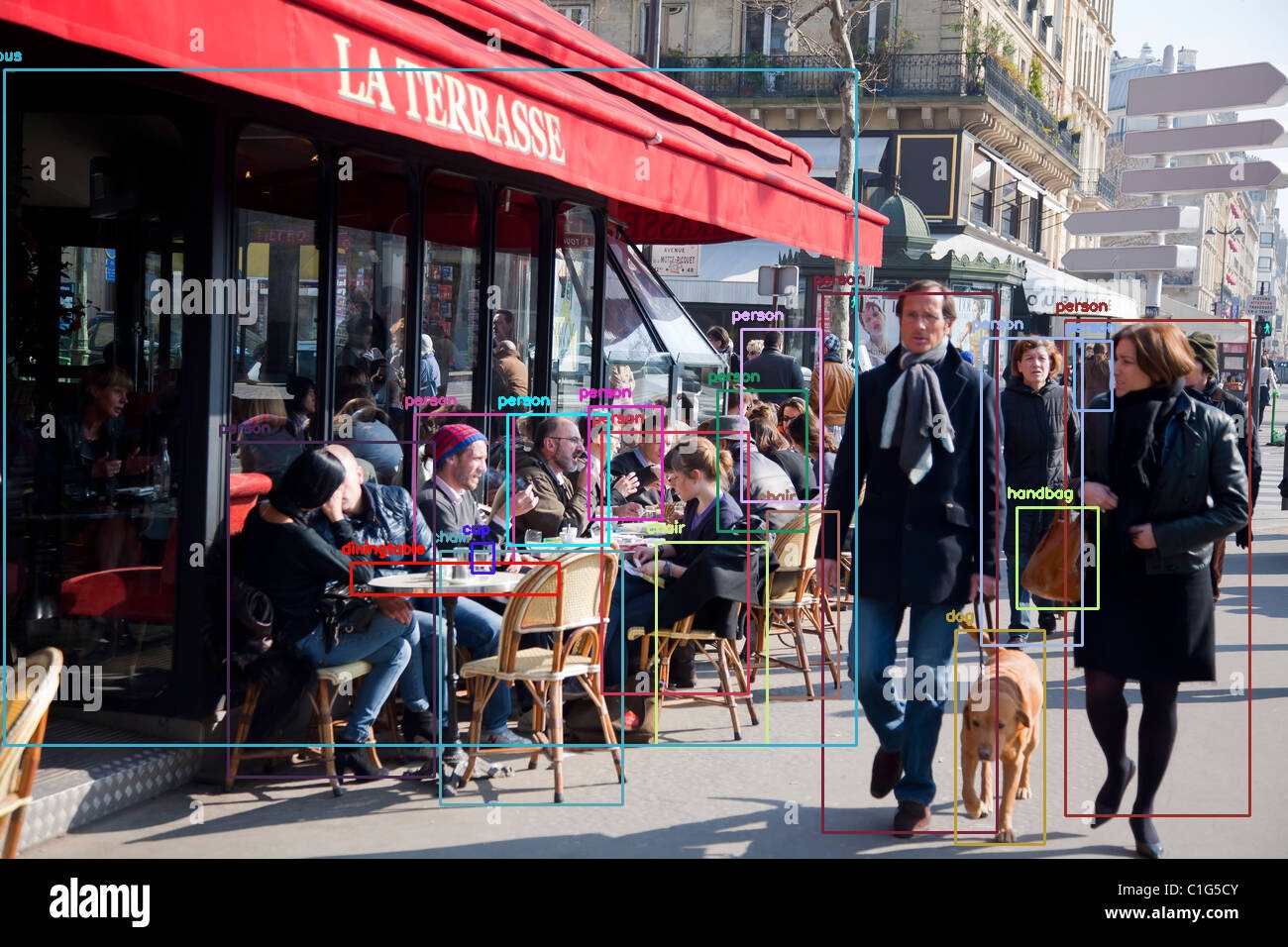

def draw_bounding_box(img, class_id, confidence, x, y, x_plus_w, y_plus_h):

label = str(classes[class_id])

color = np.random.uniform(0, 255, size=(3,))

cv2.rectangle(img, (x, y), (x_plus_w, y_plus_h), color, 2)

cv2.putText(img, label, (x-10, y-10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

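frame = cv2.imread('chat.png')
if frame is None: # cv2.imread returns None when the file cannot be read
    raise FileNotFoundError("Could not read chat.png")
Width = frame.shape[1]
Height = frame.shape[0]
scale = 0.00392 # approximately 1/255: rescales pixel values to the 0-1 range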

# Create a blob and pass it through the model
print("Creating a blob...")
blob = cv2.dnn.blobFromImage(frame, scale, (416, 416), (0, 0, 0), True, crop=False)
net.setInput(blob)
outs = net.forward(get_output_layers(net))
print("Blob created.")
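The scale value 0.00392 is approximately 1/255, so pixel values end up between 0 and 1. Roughly, blobFromImage resizes the image to 416×416, applies the scale, swaps the blue and red channels (the True argument), and reorders the data from height×width×channels to a batch of channels×height×width. The sketch below mimics those steps in NumPy on an image that is already 416×416, so the resize is skipped; to_blob is a hypothetical helper for illustration, not an OpenCV function.

```python
import numpy as np

def to_blob(image_bgr, scale=1 / 255.0):
    """Approximate cv2.dnn.blobFromImage for an already-resized BGR image."""
    rgb = image_bgr[:, :, ::-1]                  # swap B and R: BGR -> RGB
    scaled = rgb.astype(np.float32) * scale      # bring values into [0, 1]
    chw = np.transpose(scaled, (2, 0, 1))        # HWC -> CHW
    return chw[np.newaxis, ...]                  # add the batch dimension

# Synthetic 416x416 image: pure blue in BGR order.
image = np.zeros((416, 416, 3), dtype=np.uint8)
image[:, :, 0] = 255

blob = to_blob(image)
print(blob.shape)        # (1, 3, 416, 416)
print(blob[0, :, 0, 0])  # blue ends up in the last channel after the swap
```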

# Process the outputs
class_ids = []
confidences = []
boxes = []
conf_threshold = 0.5
nms_threshold = 0.4

for out in outs:
    for detection in out:
        scores = detection[5:]
        class_id = np.argmax(scores)
        confidence = scores[class_id]
        if confidence > conf_threshold:
            center_x = int(detection[0] * Width)
            center_y = int(detection[1] * Height)
            w = int(detection[2] * Width)
            h = int(detection[3] * Height)
            x = center_x - w / 2
            y = center_y - h / 2
            class_ids.append(class_id)
            confidences.append(float(confidence))
            boxes.append([x, y, w, h])
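Each detection row is read by the loop above as [center_x, center_y, width, height, objectness, class scores...], with the first four values expressed as fractions of the image size. The following sketch repeats that conversion on a hand-made row so the arithmetic can be checked; decode_detection is a hypothetical helper and the numbers are invented.

```python
def decode_detection(detection, width, height):
    """Convert one YOLO output row to (class_id, confidence, [x, y, w, h])."""
    scores = detection[5:]                      # per-class scores
    class_id = max(range(len(scores)), key=lambda k: scores[k])
    confidence = scores[class_id]
    center_x = int(detection[0] * width)
    center_y = int(detection[1] * height)
    w = int(detection[2] * width)
    h = int(detection[3] * height)
    # Top-left corner from the box centre, as in the script.
    x = center_x - w / 2
    y = center_y - h / 2
    return class_id, confidence, [x, y, w, h]

# Invented row: centred box covering half of a 640x480 image, 3 classes.
row = [0.5, 0.5, 0.5, 0.5, 0.9, 0.1, 0.8, 0.05]
print(decode_detection(row, 640, 480))  # (1, 0.8, [160.0, 120.0, 320, 240])
```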

indices = cv2.dnn.NMSBoxes(boxes, confidences, conf_threshold, nms_threshold)
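NMSBoxes first drops boxes scoring below conf_threshold, then removes boxes that overlap a higher-scoring box by more than nms_threshold, measured by intersection over union (IoU). The following is a plain-Python sketch of that greedy idea, not OpenCV's implementation; the function names iou and simple_nms are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two [x, y, w, h] boxes."""
    x1 = max(a[0], b[0])
    y1 = max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    inter = max(0, x2 - x1) * max(0, y2 - y1)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def simple_nms(boxes, scores, conf_threshold, nms_threshold):
    """Return indices of kept boxes, highest score first."""
    order = sorted(
        (i for i, s in enumerate(scores) if s > conf_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept = []
    for i in order:
        # Keep a box only if it does not overlap an already kept box too much.
        if all(iou(boxes[i], boxes[k]) <= nms_threshold for k in kept):
            kept.append(i)
    return kept

boxes = [[100, 100, 50, 50], [105, 105, 50, 50], [300, 300, 40, 40]]
scores = [0.9, 0.8, 0.7]
print(simple_nms(boxes, scores, 0.5, 0.4))  # [0, 2]
```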

# flatten() handles OpenCV versions that return an N x 1 array of indices
for i in np.array(indices).flatten():
    box = boxes[i]
    x = box[0]
    y = box[1]
    w = box[2]
    h = box[3]
    draw_bounding_box(frame, class_ids[i], confidences[i], round(x), round(y), round(x+w), round(y+h))
    print("Box", i, "added.")

cv2.imwrite('chat.cv.png', frame)

Before/After